Docker vs Solaris Containers (tldr; it's a question of kernel support, not the application)

Containers feel a bit like the elusive null packet.

When reading & learning about Linux containers (cgroups), Docker containers and containers in general, you quickly realise that, just like null packets, in concept "they are perfect in their simplicity and they are very true".

Yet achieving simplicity is hard, damn it. And just as "Many experts naively confuse the Null Packet with an Imaginary Packet", many experts appear to confuse kernel-level support for multi-tenancy with containers stuck inside virtual machines.

Below is a summary of many posts (mostly by Joyent) on the topic.

Readings:

https://www.joyent.com/blog/dockers-killer-feature (December 02, 2014)

https://www.joyent.com/blog/the-seven-characteristics-of-container-native-infrastructure (March 11, 2015)

Summary: "Native containers" means the container hypervisor performs the resource management. Importantly, these architectures require operating systems with kernel-level support if you want true, tested separation (fully separate IP stacks, port mapping, etc.).

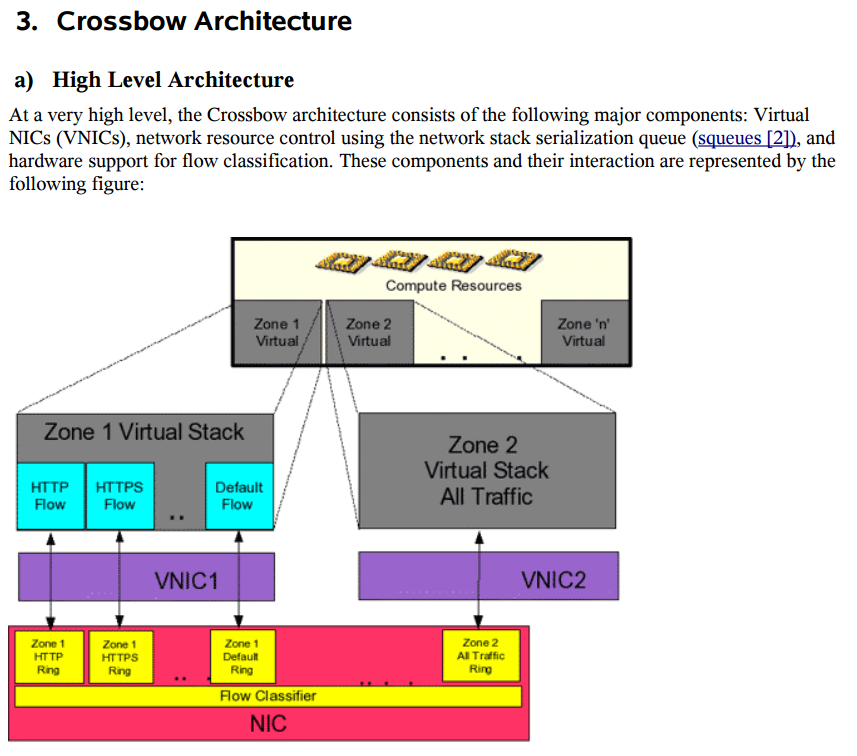
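To make the "separate IP stacks" point concrete, here's a toy model in Python (not real networking). The assumption it encodes: with one shared host IP stack, containers compete for the host's port numbers, so a second web server has to be moved to another host port; with a separate stack and address per container, each one can bind port 80. The addresses are made up, and bumping to the next free port is only a crude stand-in for Docker-style port mapping.

```python
"""Toy model (not real networking): why separate IP stacks avoid port mapping."""
from dataclasses import dataclass, field


@dataclass
class IPStack:
    ip: str
    bound_ports: set[int] = field(default_factory=set)

    def bind(self, port: int) -> int:
        """Bind the first free port at or above `port`; return the port used."""
        while port in self.bound_ports:
            port += 1  # crude stand-in for remapping to another host port
        self.bound_ports.add(port)
        return port


def shared_stack(containers: list[str], port: int) -> dict[str, str]:
    """Every container shares one host stack, so they fight over port numbers."""
    host = IPStack("10.0.0.1")  # hypothetical host address
    return {c: f"{host.ip}:{host.bind(port)}" for c in containers}


def separate_stacks(containers: list[str], port: int) -> dict[str, str]:
    """Each container gets its own stack and address, so each can use `port`."""
    endpoints = {}
    for i, c in enumerate(containers, start=2):
        stack = IPStack(f"10.0.0.{i}")  # hypothetical per-container address
        endpoints[c] = f"{stack.ip}:{stack.bind(port)}"
    return endpoints


if __name__ == "__main__":
    web = ["web-a", "web-b", "web-c"]
    print(shared_stack(web, 80))     # web-b and web-c end up on 81 and 82
    print(separate_stacks(web, 80))  # all three listen on 80, on different IPs
```

In the shared case, clients have to be told about the odd ports (or something has to proxy them); in the separate case every service sits on its normal port at its own address.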

For more information on how fully virtualised IP stacks are created, read about the OpenSolaris Crossbow project, which provides Virtual Network Interface Cards (VNICs), and the Crossbow Architecture Overview (pdf).

The goal of the project Crossbow is to [let] different virtual machines share the common NIC in a fair manner and allow system administrators to set preferential policies where necessary (e.g. the ISP selling limited B/W on a common pipe) without any performance impact.
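The "fair sharing with optional limits" goal can be illustrated with max-min fair allocation ("water-filling"). This is a generic Python sketch of the idea, not Crossbow's implementation (Crossbow does its work in the kernel); the tenant names and capacities are hypothetical, and `caps` stands in for an administrator's bandwidth policy such as the ISP example.

```python
"""Max-min fair sharing of one link among tenants, with optional caps."""
from typing import Optional


def fair_share(capacity: float, demands: dict[str, float],
               caps: Optional[dict[str, float]] = None) -> dict[str, float]:
    caps = caps or {}
    # What each tenant can actually receive: its demand, clipped by any cap.
    want = {t: min(d, caps.get(t, float("inf"))) for t, d in demands.items()}
    alloc = {t: 0.0 for t in demands}
    remaining = capacity
    active = {t for t, w in want.items() if w > 0}
    # Water-filling: split what's left equally among unsatisfied tenants;
    # anything a tenant can't use stays in the pool for the next round.
    while active and remaining > 1e-9:
        share = remaining / len(active)
        for t in sorted(active):
            give = min(share, want[t] - alloc[t])
            alloc[t] += give
            remaining -= give
        active = {t for t in active if want[t] - alloc[t] > 1e-9}
    return alloc


if __name__ == "__main__":
    # Hypothetical 10 Gbit/s NIC; vm-c wants 8 but its policy caps it at 3.
    print(fair_share(10.0, {"vm-a": 2.0, "vm-b": 6.0, "vm-c": 8.0},
                     caps={"vm-c": 3.0}))
```

Here vm-a only needs 2, vm-c is held to its cap of 3, and vm-b picks up the rest (about 5), so the whole link is used without any tenant exceeding its demand or its policy.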

https://www.joyent.com/blog/the-foundation-of-cloud-native-computing

Summary (paraphrased): "Foundations aren't so bad after all; maybe they help preserve engineering values". Also, there's an effort to standardise container interfaces; see https://www.opencontainers.org/

https://www.joyent.com/blog/scaling-docker-deployments-from-laptop-to-cloud (July 22, 2015)

Summary: Containers on Linux aren't a real solution, but Linux has a great footprint. Let's emulate the Linux system call table in SmartOS! Also: Docker's great, but because it's (most commonly?) installed on Linux, it can't offer the true privilege separation that Solaris Containers can (Linux's cgroups only go so far, so Docker suffers).

'Legacy' cloud providers get round this by wrapping containers in VMs, undoing many of the benefits of containerisation in the first place (i.e. multi-tenancy, bare-metal performance), and the hypervisor then has to virtualise the networking between VMs, adding further performance hits. All these plasters add latency when running Docker within a VM. They also affect what legacy providers charge for instances: you're paying for the VMs your cloud provider runs in order to achieve privilege separation between containers. If they built on SmartOS / Solaris / any kernel that supports the separation needed, there'd be no need for the VM.

You're not paying for an application; you're paying for instances. Look out for words like 'cluster' and 'instances' and seek clarification. A legacy provider is probably using VMs as the stop-gap for container separation.

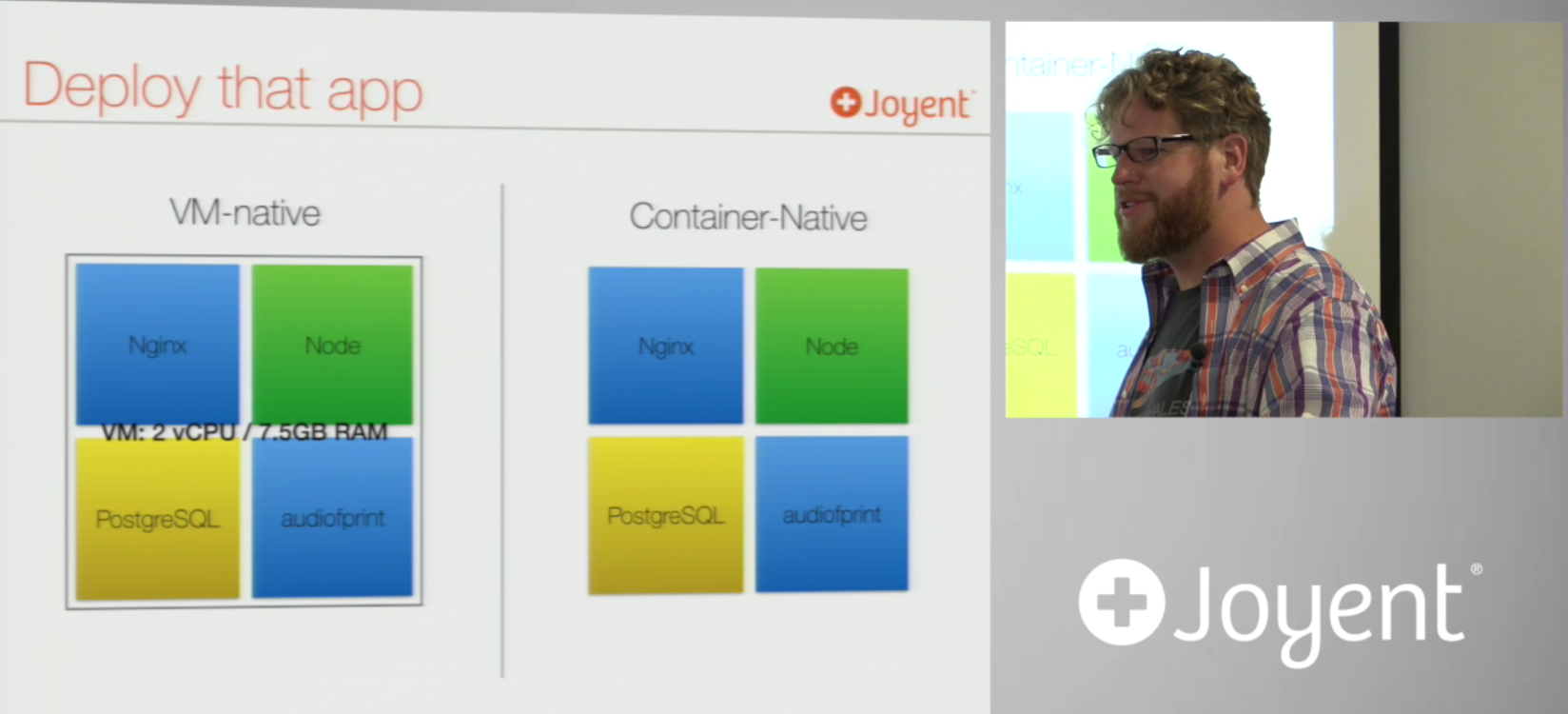

tldr; VM-based container providers leave your container instances confined to the VM they run in. For example, you might have a simple LAMP stack: you buy a VM with 2 vCPUs and just over 7GB of RAM, and run four containers within it. The VM is your ceiling:

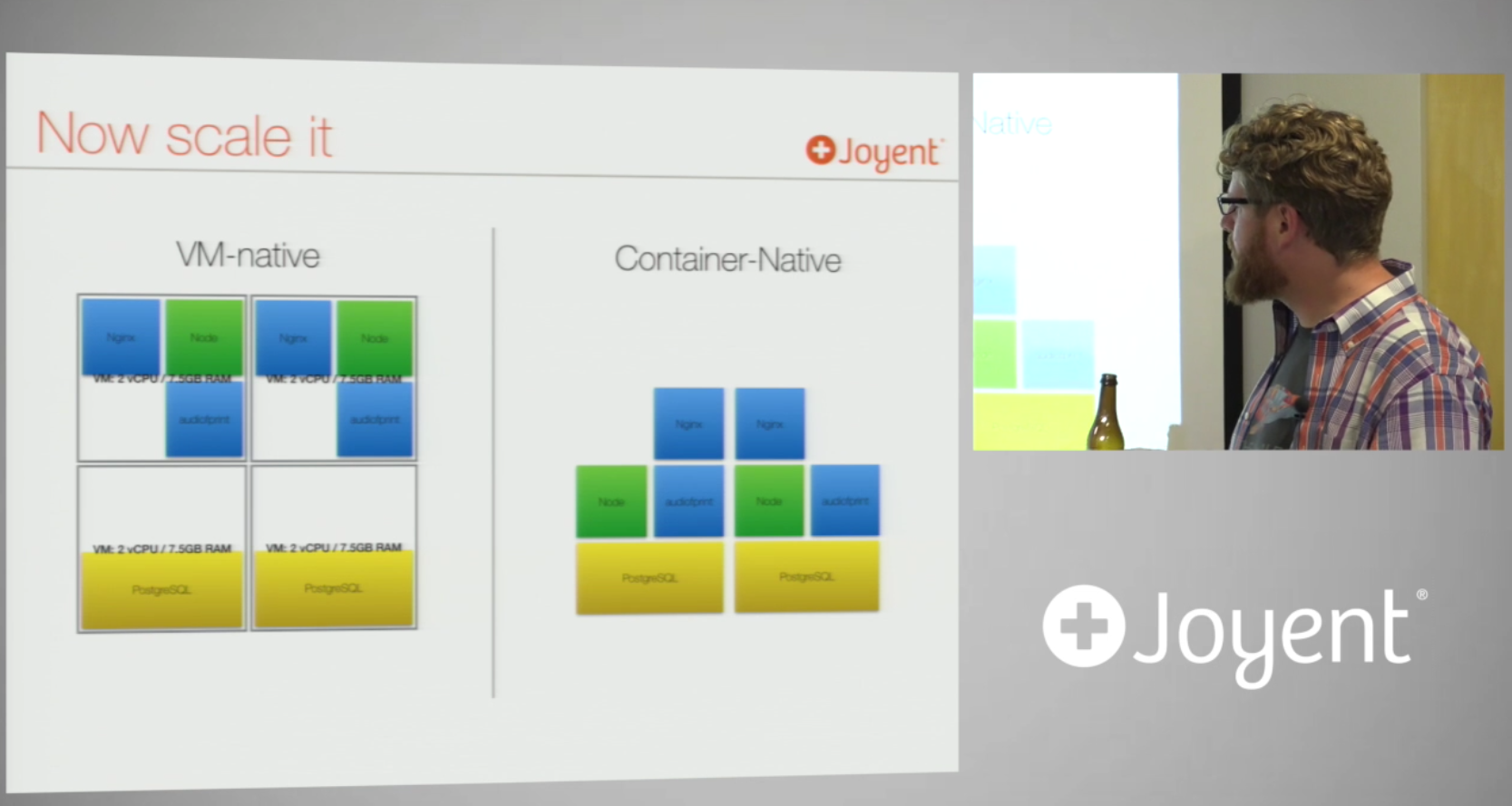

OK, not entirely, because you can replicate your VMs, but this creates waste: you're still paying for every VM you scale to, even if you only needed the extra resources for part of the service/application.
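A back-of-envelope sketch of the ceiling and the waste, in Python. The VM size and per-container figures are hypothetical, and counting VMs from total CPU and RAM only gives a lower bound (real placement is a bin-packing problem). It shows two things: a single container can never outgrow one VM, and when only one container grows, the next step up is a whole extra VM that you pay for in full.

```python
"""Back-of-envelope: the VM as a ceiling, and the waste from scaling by whole VMs.

All sizes are hypothetical; the point is the arithmetic, not real pricing.
"""
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpus: float
    ram_gb: float


def vms_needed(containers: list[Resources], vm: Resources) -> int:
    """Lower bound on whole VMs needed to hold `containers`.

    Raises if any single container is bigger than one VM: the VM is the ceiling.
    Uses totals only, so real bin-packing may need more VMs than this.
    """
    for c in containers:
        if c.vcpus > vm.vcpus or c.ram_gb > vm.ram_gb:
            raise ValueError(f"{c} exceeds the VM ceiling {vm}")
    cpu = sum(c.vcpus for c in containers)
    ram = sum(c.ram_gb for c in containers)
    return max(1, math.ceil(cpu / vm.vcpus), math.ceil(ram / vm.ram_gb))


def paid_but_idle(containers: list[Resources], vm: Resources) -> Resources:
    """Capacity you pay for (whole VMs) minus what the containers actually use."""
    n = vms_needed(containers, vm)
    return Resources(
        n * vm.vcpus - sum(c.vcpus for c in containers),
        n * vm.ram_gb - sum(c.ram_gb for c in containers),
    )


if __name__ == "__main__":
    vm = Resources(vcpus=2, ram_gb=7)  # hypothetical VM size
    # Four hypothetical containers for a small LAMP-style stack.
    lamp = [Resources(0.5, 1), Resources(0.5, 2), Resources(0.5, 2), Resources(0.25, 1)]
    print(vms_needed(lamp, vm), paid_but_idle(lamp, vm))
    # Only the third (database) container grows, yet that forces a second VM.
    grown = lamp[:2] + [Resources(1.5, 4)] + lamp[3:]
    print(vms_needed(grown, vm), paid_but_idle(grown, vm))
```

With these made-up numbers the four containers fit in one VM; growing only the database container pushes the total to two VMs, leaving 1.25 vCPUs and 6GB of RAM paid for but idle.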

"Everything in Joyents cloud is local disk", they don't use network attached storage because of the performance bottlenecks it creates. Also, because the containers are stored on local disk directly, data persistence is easier because the container instances are not contained within VMs anymore.

From laptop to cloud

Things to note:

Joyent does not run the docker binary; it simply implements the Docker API and translates those Docker API calls into its private API calls, which provision the containers. When you use the Docker API from your laptop against Joyent's cloud, your SSH key never gets sent to Triton; it is just used to create a TLS certificate for identification.
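The "implement the API, translate the calls" idea is essentially an adapter. Here's a minimal Python sketch: it accepts the body of a Docker Engine API `POST /containers/create` request (`Image`, `Cmd` and `HostConfig.Memory` are real Docker API fields) and turns it into a call to a provider's own provisioning API. `ProvisionRequest`, its fields and the 1024MB default are hypothetical; this is not how Triton is actually built, and it ignores API version prefixes, query parameters and every other endpoint.

```python
"""Adapter sketch: Docker API request in, private provisioning call out.

ProvisionRequest and DEFAULT_MEMORY_MB are hypothetical, not Triton's API.
"""
import json
from collections.abc import Callable
from dataclasses import dataclass

DEFAULT_MEMORY_MB = 1024  # hypothetical size used when the client sets no limit


@dataclass(frozen=True)
class ProvisionRequest:  # hypothetical payload for the provider's own API
    image: str
    command: tuple[str, ...]
    memory_mb: int


def translate_create(body: str) -> ProvisionRequest:
    """Map a Docker `POST /containers/create` JSON body onto ProvisionRequest."""
    spec = json.loads(body)
    if not spec.get("Image"):
        raise ValueError("create request has no Image")
    host_config = spec.get("HostConfig") or {}
    mem_bytes = host_config.get("Memory") or 0  # Docker uses 0 for "no limit"
    memory_mb = mem_bytes // (1024 * 1024) if mem_bytes else DEFAULT_MEMORY_MB
    cmd = spec.get("Cmd") or []
    if isinstance(cmd, str):  # the API accepts a string or a list of strings
        cmd = [cmd]
    return ProvisionRequest(spec["Image"], tuple(cmd), memory_mb)


# Only the routes the provider chooses to support get translated.
ROUTES: dict[tuple[str, str], Callable[[str], ProvisionRequest]] = {
    ("POST", "/containers/create"): translate_create,
}


def handle(method: str, path: str, body: str) -> ProvisionRequest:
    handler = ROUTES.get((method, path))
    if handler is None:
        raise NotImplementedError(f"{method} {path} is not translated")
    return handler(body)


if __name__ == "__main__":
    request = json.dumps({
        "Image": "nginx",
        "Cmd": ["nginx", "-g", "daemon off;"],
        "HostConfig": {"Memory": 512 * 1024 * 1024},
    })
    print(handle("POST", "/containers/create", request))
```

Because the client only ever talks to the Docker API, the stock docker CLI on the laptop doesn't need to know what sits behind it; the provider is free to back each request with whatever isolation its own kernel offers.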

Related build script from the talk: https://github.com/misterbisson/clustered-couchbase-in-containers/blob/master/start.sh. Note: this approach has developed a lot since that talk and evolved into the 'autopilot pattern', but it's still interesting to see these older, simpler scripts and how they evolved into Autopilot: http://autopilotpattern.io/example

It's all pretty cool & makes a lot of sense.

https://www.joyent.com/blog/why-container-security-is-critical (October 06, 2015)

Summary: The immaturity of Linux containers is a risk, often plastered over by running multiple containers inside a VM. This adds cost and complexity, and ties the lifecycle of the container to the VM. Being inside a VM also makes data persistence (inside containers) a challenge. With container-native infrastructure you get bare-metal performance with the security of a multi-tenant kernel that has over 10 years of maturity.

https://www.joyent.com/blog/bringing-clarity-to-containers (December 17, 2015)

Summary: The containers market can be confusing. It's early days; standards are emerging and may ultimately lead to interchangeability.

http://containersummit.io/events/nyc-2016/videos/the-evolving-container-ecosystem

https://www.joyent.com/blog/running-node-js-in-containers-with-containerpilot

Still to read:

http://dtrace.org/blogs/brendan/2013/01/11/virtualization-performance-zones-kvm-xen/