Back up a server to Google Cloud Storage using rclone / rsync

Install rclone on the source server:

curl https://rclone.org/install.sh | sudo bash

The rest of the guide will walk through:

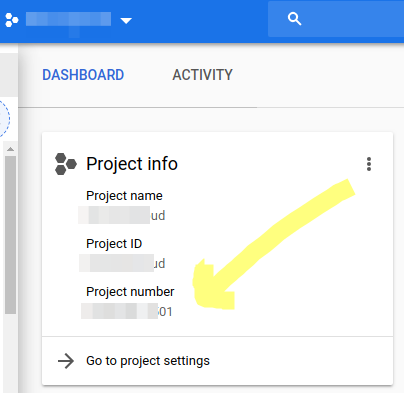

- Create a Google Cloud project and get its project number.

- Create a storage bucket in that project.

- Run rclone config, telling it to use Google Cloud Storage and giving it the project number.

Create Project

After creating a project workspace in the Google Cloud Console, you can get the project number from the console.

Create a bucket using the Google Cloud Console, and choose which storage class you want (for example, Coldline storage is a low-cost option for rarely accessed data such as disaster recovery backups).

Configure rclone

Run rclone config

This will ask you to name your remote and to choose what type of remote it is (in our case Google Cloud Storage). When prompted, enter the project number you found in your Google Cloud Console.

Authentication

You'll be asked to allow rclone access to your Google Cloud Storage. If you're on a server, press "N" and paste the given URL into a browser on your local machine to authorise access. This will generate an auth code to paste back into the rclone config procedure.

Specify which directories to back up

Create a file, e.g. include-from.txt, in your /root directory. We're going to back up as much as possible (settings, programs, user data, etc.):

vi /root/include-from.txt:

/etc/**

/var/**

/usr/**

/local/**

/opt/**

/home/**

/root/**

See the rclone filter rules documentation for more information on why we use **. Unlike *, it also matches the / in paths, so each rule descends into subdirectories.
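As a rough sketch, the same rules file can be written in one step with a here-document instead of an editor. The function name write_include_file is a hypothetical helper introduced only for illustration, and the target path is passed in as an argument:

```shell
# write_include_file is a hypothetical helper (not part of rclone).
# It writes the include rules from this guide to the path given as $1.
write_include_file() {
    local target="$1"
    # The quoted 'EOF' stops the shell from expanding anything in the rules.
    cat > "$target" <<'EOF'
/etc/**
/var/**
/usr/**
/local/**
/opt/**
/home/**
/root/**
EOF
}
```

On the server this would be called from a root shell as write_include_file /root/include-from.txt.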

Perform the backup

The following will perform a copy backup from your server to your Google Cloud storage, using the include-from.txt rules above.

To check what it will copy before running the command for real, add the --dry-run option. When ready, run:

sudo rclone copy -L --include-from /root/include-from.txt --local-no-check-updated / <remote-name>:<bucket-name>
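One way to keep the dry run and the real run identical is to wrap the command in a small shell function and pass --dry-run as an extra flag. This is only a sketch: run_backup and the RCLONE_BIN variable are assumptions made for illustration, not rclone features.

```shell
# RCLONE_BIN is a hypothetical override; setting it to "echo" prints the
# command instead of running it.
RCLONE_BIN="${RCLONE_BIN:-rclone}"

# Usage: run_backup <remote-name>:<bucket-name>[/subdir] [extra rclone flags...]
run_backup() {
    local dest="$1"
    shift
    # Same flags as the command in this guide; any extra flags
    # (such as --dry-run) are inserted before the source and destination.
    "$RCLONE_BIN" copy -L \
        --include-from /root/include-from.txt \
        --local-no-check-updated \
        "$@" \
        / "$dest"
}
```

From a root shell, run_backup <remote-name>:<bucket-name> --dry-run previews the copy, and the same call without --dry-run performs it.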

Where:

- <remote-name> is the name you gave when running rclone config. If you've forgotten it, run rclone listremotes to get the name.

- <bucket-name> is the name of the bucket you created on Google Cloud Storage.

By default this will put the backup into the root of your Google Storage bucket. If you want your backup to go into a subdirectory within your storage bucket, first create the directory using the Google Cloud Console, and then append it to your backup command, e.g.:

sudo rclone copy -L --include-from /root/include-from.txt --local-no-check-updated / <remote-name>:<bucket-name>/2019-backup

This would attempt to put your backup in a folder called 2019-backup within your storage bucket.
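If the folder name should follow the current year rather than being typed by hand, the destination can be built with date. This is a minimal sketch that assumes the <year>-backup naming used in the example; backup_dest is a hypothetical helper name.

```shell
# backup_dest is a hypothetical helper: it prints a destination of the form
# <remote-name>:<bucket-name>/<year>-backup using the current year.
backup_dest() {
    local remote="$1" bucket="$2"
    printf '%s:%s/%s-backup\n' "$remote" "$bucket" "$(date +%Y)"
}
```

The printed value can then be used as the last argument of the rclone copy command in place of the hard-coded path.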

Automate

Add your backup command to your crontab.

crontab -e
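As an illustration of what the crontab line might look like, the helper below prints an entry that runs the backup command daily at 02:30. The schedule is an arbitrary example and cron_entry is a hypothetical name; the line is meant for root's crontab, so it omits sudo.

```shell
# cron_entry is a hypothetical helper: it prints a crontab line that runs
# the backup from this guide every day at 02:30 (an arbitrary example time).
# $1 is the destination, e.g. <remote-name>:<bucket-name>
cron_entry() {
    local dest="$1"
    printf '30 2 * * * rclone copy -L --include-from /root/include-from.txt --local-no-check-updated / %s\n' "$dest"
}
```

Paste the printed line into the editor opened by crontab -e. The five leading fields are minute, hour, day of month, month and day of week.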