Using systemd-nspawn containers with publicly routable IPs (IPv6 and IPv4) via bridged mode for high-density testing whilst balancing tenant isolation

If you're short on time and want to build this right away, see the "Tutorial" heading for how to set up systemd-nspawn with bridge mode and public IP addressing. There's also an accompanying systemd-nspawn repo with a scripted process to deploy everything in this article automatically:

Disclaimer: Lennart Poettering specifically stated that systemd-nspawn was conceived for only testing your software which has networking components, and what we're doing here might be considered misuse. However, Poettering said that 9 years ago, and things change. Today, systemd-nspawn is being used for more dependable workloads.

- The first part of this article focuses on IPv4, because I want to get that clearer in my own mind first.

- However, IPv6 is the far more natural fit: when you buy or rent a server you typically get an IPv6 /60 allocated to you for free (cost savings), which gives you 295,147,905,179,352,825,856 globally routable addresses. What's more, this entire public subnet is already routed to your server*, making route configuration simpler compared to IPv4, where you have to battle much more with ARP, promiscuous mode, and port security.

*I'm not suggesting you try to run 295,147,905,179,352,825,856 services on a single host; please do try, though, and get back to me!
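The address count comes straight from the prefix length. An IPv6 address is 128 bits long, so a /60 leaves 68 bits for your own addressing:

```latex
2^{128-60} = 2^{68} = 295{,}147{,}905{,}179{,}352{,}825{,}856
```

Put another way, a /60 is 16 subnets of /64 (the usual size of a single network segment), and each of those holds 2^64 addresses.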

I've previously written about IPv6 becoming more commonplace:

Why now?

TLDR: Hermetic /usr/ is awesome; let's popularize image-based OSes with modernized security properties built around immutability, SecureBoot, TPM2, adaptability, auto-updating, factory reset, uniformity – built from traditional distribution packages, but deployed via images.

- Lennart Poettering, 'Fitting Everything Together' May 2022

- See also counter views on the topic at https://lwn.net/Articles/894396/

For an overview of systemd generally see: NYLUG Presents: Lennart Poettering -on- Systemd:

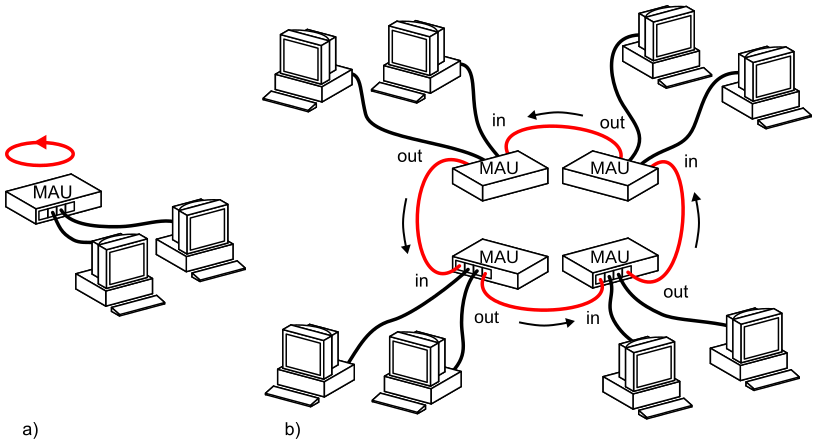

What we're building

- Multi-tenancy: one server running n lightweight containers, each with its own publicly routable address. This is key: to the outside world, each container looks like an ordinary host.

- Each lightweight container can run any application it chooses (we're using a Debian 11 install for each container)

- Every container has some isolation promises**

From a network perspective to the outside world, each container will be globally reachable since each container gets its own public IP address assigned***.

This is decidedly not IP masquerading, where multiple internal IPs share one translated source IP address. We don't want that; we want publicly addressable endpoints. This is decidedly not NAT.

No NAT? This sounds really insecure?

NAT != Firewall. The goals of this article are to explore systemd-nspawn. See 'The goals of this project'.

** Whilst remembering that promises may be broken

***Again, this is why IPv6 makes sense here, but I digress; sticking with IPv4 for now.

Why? The goals of this project are:

- Maximising frugality

- Not sacrificing reachability: every service must be directly contactable via its public IP address (yes really, no SNAT/DNAT masquerading here)

- Reproducibility - In the 'DevOps' world we're very familiar with infrastructure as code (buzzword for: I can audit, change, and rebuild my application workloads easily if I have to). The same is useful for say, testing network topology generation

What's the use case?

I'm quite keen to spin up and tear down test k0s clusters (k0s is a lightweight Kubernetes distribution that is easy to install, and it needs at least 3 nodes to be useful). With nspawn plus public IP addressing, frugal ephemeral clusters become possible.

- But you could use nspawn containers for almost anything, so go for it. Anything that runs and binds to an address and port can be run from an nspawn container. Want to run a database? Apache? An entire stack?

- nspawn containers support the "Ephemeral" setting, which per the docs means: "If enabled, the container is run with a temporary snapshot of its file system that is removed immediately when the container terminates."
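Here is a rough sketch of how the Ephemeral setting could be combined with the bridged networking from the tutorial below. It is a hypothetical helper (write_nspawn_config is a made-up name, not part of systemd) that writes one .nspawn settings file per container. It assumes each machine already has its own image under /var/lib/machines/<name>, and that Ephemeral= goes in the [Exec] section, as described in the systemd.nspawn documentation:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a .nspawn settings file for one container.
#   $1 = target directory (normally /etc/systemd/nspawn)
#   $2 = machine name (must match the image directory under /var/lib/machines)
#   $3 = host bridge to attach the container to (br0 in this article)
#   $4 = "yes" to run from a throwaway snapshot (Ephemeral=), defaults to "no"
write_nspawn_config() {
  local dir="$1" machine="$2" bridge="$3" ephemeral="${4:-no}"
  mkdir -p "$dir"
  {
    echo "[Exec]"
    echo "Ephemeral=${ephemeral}"
    echo
    echo "[Network]"
    echo "Bridge=${bridge}"
  } > "${dir}/${machine}.nspawn"
}

# Example (as root): three throwaway nodes for a test k0s cluster
# for n in 1 2 3; do write_nspawn_config /etc/systemd/nspawn "k0s-node${n}" br0 yes; done
```

Ephemeral containers discard everything written inside them when they stop, which suits short-lived test clusters. The snapshot may be cheap on btrfs but can amount to a full copy of the image on other filesystems.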

Tutorial: How to setup systemd-nspawn bridge mode

The high-level steps are:

1. Create a Debian 11 Virtual Private Server (VPS)

2. Install systemd-nspawn and create a machine (aka lightweight container)

3. Configure bridged networking from external -> host -> container(s) (the tricky bit)

We'll also be creating a floating IPv4 address and assigning it to our VPS. These instructions are for Hetzner, but the concepts are the same regardless of your provider.

Creating the nspawn Debian container image

- First, create a Debian 11 Hetzner Virtual Private Server (note this is a referral link; you don't have to use a Hetzner VM, but all these instructions assume you do, especially the floating IP part).

- Then follow the instructions below to install systemd-nspawn and a few (optional) helper utilities for debugging (`tmux`, `traceroute`, `vim`). Note that `qemu-guest-agent` is absolutely not needed, though it's helpful on Hetzner for resetting your password via the console if you get locked out.

SSH into your server (the host) and start setting up systemd-nspawn:

apt update

apt install -y systemd-container debootstrap bridge-utils tmux telnet traceroute tcpdump vim qemu-guest-agent

echo "set mouse=" > ~/.vimrc

echo 'kernel.unprivileged_userns_clone=1' >/etc/sysctl.d/nspawn.conf

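# Permit IP forwarding: uncomment the setting in /etc/sysctl.conf, then show it

sed -i 's/^#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/' /etc/sysctl.conf

grep 'ip_forward' /etc/sysctl.conf

# Apply it immediately, then reload /etc/sysctl.conf and the /etc/sysctl.d/ drop-ins (including nspawn.conf above)

echo 1 > /proc/sys/net/ipv4/ip_forward

sysctl --system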

mkdir -p /etc/systemd/nspawn

Create a Debian nspawn container using debootstrap

debootstrap --include=systemd,dbus,traceroute,telnet,curl bullseye /var/lib/machines/debian

dbus is needed so that you can log in to your guest from the host (with machinectl login)

Log in to your guest container, set a password and configure the tty

Log in to your container:

systemd-nspawn -D /var/lib/machines/debian -U --machine debian

Spawning container buster on /var/lib/machines/debian.

Press ^] three times within 1s to kill container.

Selected user namespace base 818610176 and range 65536.

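Sidenote: note the extremely high user ID (uid) and user namespace base. With -U, systemd-nspawn picks an unused range of 65536 UIDs on the host and maps the container's UIDs onto it, so root inside the container is an unprivileged user outside it. This builds on the same ideas as systemd's "Dynamic User" concept for greater isolation, extending traditional *nix ownership and isolation models.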
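The mapping is plain offset arithmetic: a UID inside the container is shifted by the base that nspawn picked. The sketch below hard-codes the base and range from the example output above. Those numbers are assumptions, because your base will differ per host and per container. container_uid_to_host is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: translate a UID inside the container to the UID the host sees,
# using the "Selected user namespace base ... and range ..." values
# printed by `systemd-nspawn -U` (example values; yours will differ).
NS_BASE=818610176
NS_RANGE=65536

container_uid_to_host() {
  local uid="$1"
  # Only UIDs 0..NS_RANGE-1 inside the container are mapped
  if (( uid < 0 || uid >= NS_RANGE )); then
    echo "uid $uid is outside the mapped range" >&2
    return 1
  fi
  echo $(( NS_BASE + uid ))
}

# container_uid_to_host 0      # -> 818610176 (root in the guest)
# container_uid_to_host 1000   # -> 818611176
```

On the host, a process list such as ps -o uid,cmd should show the guest's processes running under UIDs in this high range.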

- If you're interested see

talk: "NYLUG Presents: Lennart Poettering -on- Systemd in 2018- Dynamic user chapter" or

text: Dynamic Users with systemd

The above will drop you inside your guest Debian container. Now, still inside the guest shell, configure a password:

# set root password

root@debian:~# passwd

New password:

Retype new password:

passwd: password updated successfully

# allow login via local tty (may not be needed any more)

# 'pts/0' seems to be used when doing systemd-nspawn --boot, 'pts/1' with machinectl login

root@debian:~# printf 'pts/0\npts/1\n' >> /etc/securetty

# logout from container

root@debian:~# logout

Container debian exited successfully.

It can be confusing to know whether you're inside or outside the guest (unless you change the container hostname).

A quick way to tell whether you're inside a guest or on the host is to:

- Try to exit by pressing Ctrl + ]]] (hold the Control key and press the right square bracket three times within a second)

- Run ls /dev: inside a guest, since permissions are limited, you won't see all the /dev devices exposed

- Set a hostname for your container

On the host, create an /etc/systemd/nspawn/debian.nspawn config file for your container

Here we configure the guest container to connect to a bridge interface called br0 (we haven't created it yet; that's the next step). We only need these two lines for a minimal nspawn container in bridged mode:

vim /etc/systemd/nspawn/debian.nspawn

[Network]

Bridge=br0

Create host network configuration

Before we start, here's a primer of ip command shortcuts I found helpful:

- ip -c address (the -c gives you coloured output, easier to see 👀)

- ip a is the same as ip address (save your fingers!)

Remove existing automatic cloud config:

Your VPS came with automatic address configuration which needs removing.

rm /etc/network/interfaces.d/50-cloud-init

Create a new network config for eth0, plus a bridge interface (br0) for the containers:

Assumptions:

Here we'll use the following example IP addresses. Yours will mostly be different, and we highlight where not.

- Your VPS public IP address is 203.0.113.1/32 (yours will be different)

- Your Hetzner gateway is 172.31.1.1 (you don't want to change this; it's Hetzner's reserved IP address for the gateway, see the Hetzner FAQ)

- You have created and assigned a floating IP address to your VPS (docs), e.g. 203.0.113.2/32 (yours will be different)

- You have not assigned the floating IP to your interface eth0 (ignore Hetzner's example; don't assign your floating IP address to eth0)

Edit your host: vim /etc/network/interfaces

# The loopback network interface

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 203.0.113.1

netmask 255.255.255.255

pointtopoint 172.31.1.1

gateway 172.31.1.1

dns-nameservers 185.12.64.1 185.12.64.2

#Bridge setup

auto br0

iface br0 inet static

bridge_ports none

bridge_stp off

bridge_fd 1

pre-up brctl addbr br0

up ip route add 203.0.113.2/32 dev br0

down ip route del 203.0.113.2/32 dev br0

address 203.0.113.1

netmask 255.255.255.255

dns-nameservers 185.12.64.1 185.12.64.2

You can manually bring up the interfaces by doing:

ip link set dev eth0 up

ifup br0

You can view interface statuses with the following (don't worry if br0 is down at the moment):

ip -c link

ip -c addr

(-c gives you coloured output! 🌈)

Help, I'm locked out of my server after configuring a bridge!

Playing with bridges when you only have 1 Ethernet interface is a great way to accidentally lock yourself out of your server.

Thankfully it's not hard to get back in. Use the console view (or your provider's equivalent) to log in to your server directly and reset the networking if you need to. This is why we installed qemu-guest-agent earlier: with the agent installed you can 'rescue' the server and change the password from the console, and without it you can't.

Reboot and verify you're still able to ssh into your server

After a reboot, your interfaces (at least `eth0`) should come up and you'll still be able to ssh into your server.
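Each additional floating IP you hand to a container needs its own host route pointing at br0, following the pattern of the up/down lines for 203.0.113.2 in the br0 stanza. The sketch below prints those lines for any number of floating IPs. floating_ip_route_lines is a hypothetical helper, and 203.0.113.3 is just another documentation-range example. Each IP must still be assigned to the server in your provider's console:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the per-floating-IP route lines to paste into
# the "iface br0" stanza of /etc/network/interfaces.
floating_ip_route_lines() {
  local ip
  for ip in "$@"; do
    # Route each floating IP (as a /32) towards the bridge the containers use
    printf 'up ip route add %s/32 dev br0\n' "$ip"
    printf 'down ip route del %s/32 dev br0\n' "$ip"
  done
}

# Example:
# floating_ip_route_lines 203.0.113.2 203.0.113.3
```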

Start your container and assign the public ip address to it

Starting your nspawn container is simple:

systemctl start systemd-nspawn@debian.service

# Enable container to automatically start upon reboot, if you like

systemctl enable systemd-nspawn@debian.service

Since nspawn containers are just another systemd service, you can use systemctl status systemd-nspawn@debian.service to inspect them, and even journalctl for logs (for example journalctl -u systemd-nspawn@debian.service).

See the container running with machinectl list --full:

machinectl list --full

MACHINE CLASS SERVICE OS VERSION ADDRESSES

debian container systemd-nspawn debian 11 203.0.113.2

1 machines listed.

At this point you won't have any IP address listed yet (the output above shows how it looks once we're finished), so let's do that next! We're going to:

- log in to your guest container (with machinectl login)

- assign the container your floating IP address (using ip)

- configure routing so that packets flow from your VPS host -> container and back again

Log in to your debian container, assign an IP & configure routing

Logging into the guest container called debian:

machinectl login debian

Connected to machine debian. Press ^] three times within 1s to exit session.

Debian GNU/Linux 11 debian-guest pts/1

debian-guest login: root

Password:

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

Last login: Fri Feb 3 22:26:43 UTC 2023 on pts/1

root@debian-guest:~#

Configuring an IP address for the nspawn guest container

Because we have an /etc/systemd/nspawn/debian.nspawn file which specifies "Bridge=br0", when you started your container, systemd performed some automatic bridge configuration for you.

Still in your guest container, run ip -c link. Note that systemd has created a host0 interface for you automatically:

# ip -c link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: host0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

Now log out of your guest and run ip -c link on your VPS host, outside of your guest:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

2: eth0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

3: br0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

6: vb-debian@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP mode DEFAULT group default qlen 1000

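You'll see that systemd has created a new interface called vb-debian@if2. This is one end of a virtual Ethernet (veth) pair. vb-debian lives in the host's network namespace and is attached to br0, while its peer is the host0 interface inside your guest container's namespace. The @if2 suffix is the peer's interface index, matching "2: host0" in the guest listing above. This is explained in detail in the systemd-nspawn network-bridge docs.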
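To see which container veths are plugged into br0 without scanning the whole list, you can ask ip for one-line output (ip -o link) and keep only the members of the bridge. The sketch below extracts just the interface names, with the @ifN peer suffix removed. bridge_members is a hypothetical helper, and it assumes the usual `ip -o link` field layout where the name is the second field and the bridge follows the word "master":

```shell
#!/usr/bin/env bash
# Sketch: read `ip -o link` output on stdin and print the names of the
# interfaces enslaved to the given bridge, without the "@ifN" peer suffix.
bridge_members() {
  local bridge="$1"
  awk -v br="$bridge" '
    {
      for (i = 1; i < NF; i++) {
        if ($i == "master" && $(i + 1) == br) {
          name = $2
          sub(/:$/, "", name)   # drop the trailing colon
          sub(/@.*/, "", name)  # drop the @ifN peer suffix
          print name
          next
        }
      }
    }'
}

# Usage on the host:
# ip -o link | bridge_members br0
```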

Log in to your guest again & configure it with the floating IP address

The floating ip address is to simulate having lots of available IP addresses (again, this is more suited to IPv6 but this still works in IPv4 with some effort).

# Login with your guest user root and password

machinectl login debian

# Set an IP address on the host0 interface

ip addr add 203.0.113.2/32 dev host0

ip link set host0 up

# Verify host0 interface is up

ip -c link

The above logs you back into the guest called debian, and adds an IP address (the floating ip address) to the host0 interface, which was created by systemd-nspawn and connects to our br0 bridge on the host.

Note that you can't ping our host or reach the outside world from your container yet: 🥹

# Try to ping the host ip from the guest

# Remember that 203.0.113.1 is the example IP address of the VPS eth0

# yours will be different:

root@debian:~# ping 203.0.113.1

ping: connect: Network is unreachable

# Try to ping google from the guest

root@debian:~# ping 8.8.8.8

ping: connect: Network is unreachable

That's partly because the guest's default routing table is empty:

ip route show default

# nothing 🥹

Add default gateway from container to host

In order for the nspawn guest container to reach the host, add a default route out of its host0 interface:

ip route add default dev host0

Now we can ping the host IP from inside our guest! 🚀:

root@debian:~# ping 203.0.113.1

PING 203.0.113.1 (203.0.113.1) 56(84) bytes of data.

64 bytes from 203.0.113.1: icmp_seq=1 ttl=64 time=0.135 ms

64 bytes from 203.0.113.1: icmp_seq=2 ttl=64 time=0.057 ms

64 bytes from 203.0.113.1: icmp_seq=3 ttl=64 time=0.071 ms

^C

--- 203.0.113.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2030ms

rtt min/avg/max/mdev = 0.057/0.087/0.135/0.033 ms

...and we can ping from our host to our floating IP, which is assigned to our guest container:

# Logout of container

logout

# Back in main host:

root@host:~# ping 203.0.113.2

PING 203.0.113.2 (203.0.113.2) 56(84) bytes of data.

64 bytes from 203.0.113.2: icmp_seq=1 ttl=64 time=0.110 ms

64 bytes from 203.0.113.2: icmp_seq=2 ttl=64 time=0.061 ms

^C

--- 203.0.113.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1020ms

rtt min/avg/max/mdev = 0.061/0.085/0.110/0.024 ms

However, we still can't reach the external internet from our container (log back into your container with machinectl login debian and try to ping 8.8.8.8). 🥹 So close!

root@debian-test3:~# ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

From icmp_seq=1 Destination Host Unreachable

From icmp_seq=2 Destination Host Unreachable

From icmp_seq=3 Destination Host Unreachable

^C

--- 8.8.8.8 ping statistics ---

4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3068ms

pipe 4

Configure proxy_arp to allow eth0 to reply to arp requests for ip address(es) on the guest interface host0

At this point, when you try to ping your floating IP externally (e.g. your own internet connection) you cannot ping, and will see "Destination Host Unreachable". This is because proxy_arp is turned off.

If you're not interested in why this is the case, simply turn on proxy_arp on your host with:

echo 1 > /proc/sys/net/ipv4/conf/eth0/proxy_arp
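Writing to /proc like this only lasts until the next reboot. Below is a minimal sketch of making the setting persistent with a sysctl drop-in, which also pins the IPv4 forwarding setting from earlier. write_routing_sysctls and the file name 90-nspawn-routing.conf are hypothetical choices (any *.conf file under /etc/sysctl.d is read). eth0 is assumed to be your uplink:

```shell
#!/usr/bin/env bash
# Sketch: write a sysctl drop-in that re-enables IPv4 forwarding and
# proxy_arp on the uplink interface at every boot.
#   $1 = sysctl.d directory (normally /etc/sysctl.d)
#   $2 = uplink interface (eth0 in this article)
write_routing_sysctls() {
  local dir="$1" iface="$2"
  mkdir -p "$dir"
  cat > "${dir}/90-nspawn-routing.conf" <<EOF
net.ipv4.ip_forward = 1
net.ipv4.conf.${iface}.proxy_arp = 1
EOF
}

# As root, then load every drop-in without rebooting:
# write_routing_sysctls /etc/sysctl.d eth0 && sysctl --system
```

Afterwards, cat /proc/sys/net/ipv4/conf/eth0/proxy_arp should print 1.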

An explanation of why proxy_arp is needed follows below.

Since ARP requests will be coming from your cloud provider's switch to the 'physical' eth0, your server needs to reply to ARP requests from the gateway. You can watch them arriving at your host with:

tcpdump -n -i eth0 -e arp

11:19:32.767498 d2:74:7f:6e:37:e3 > ff:ff:ff:ff:ff:ff, ethertype ARP (0x0806), length 42: Request who-has 203.0.113.2 tell 172.31.1.1, length 28

11:19:33.785587 d2:74:7f:6e:37:e3 > ff:ff:ff:ff:ff:ff, ethertype ARP (0x0806), length 42: Request who-has 203.0.113.2 tell 172.31.1.1, length 28

11:19:34.809684 d2:74:7f:6e:37:e3 > ff:ff:ff:ff:ff:ff, ethertype ARP (0x0806), length 42: Request who-has 203.0.113.2 tell 172.31.1.1, length 28

Destination Host Unreachable when we try to ping our floating IP: the gateway's ARP table has no entry for 203.0.113.2 because our host is not replying to its ARP requests.

Above, we see Hetzner's gateway IP (172.31.1.1) endlessly sending ARP requests to our host, and none of them are being answered. That is because our floating IP address is not assigned to eth0 (and shouldn't be).

- We've associated (but not assigned) our floating IP to the VPS server, so the gateway is trying to populate its ARP table ✅

- We've intentionally not assigned our floating IP address to our eth0, which is why ARP requests from the gateway are going unanswered

And sure enough, our host's ARP table has no entry for our floating IP, only for the gateway:

ip neigh

172.31.1.1 dev eth0 lladdr d2:74:7f:6e:37:e3 REACHABLE

(ip neigh is the more recent replacement for the arp command.)

So to fix the unanswered ARP requests, we need to turn on proxy_arp for the eth0 interface:

echo 1 > /proc/sys/net/ipv4/conf/eth0/proxy_arp

After that, ARP requests from the gateway are finally answered by our eth0, even though our floating IP address is not directly assigned to that interface:

11:47:27.321712 d2:74:7f:6e:37:e3 > 96:00:01:e1:5c:65, ethertype ARP (0x0806), length 42: Request who-has 203.0.113.2 tell 172.31.1.1, length 28

11:47:27.321730 96:00:01:e1:5c:65 > d2:74:7f:6e:37:e3, ethertype ARP (0x0806), length 42: Reply 203.0.113.2 is-at 96:00:01:e1:5c:65, length 28

Our eth0 interface is now replying to ARP requests from the gateway.

And now that proxy_arp is enabled on eth0, our host's ARP table also contains an entry for our floating IP 203.0.113.2, reachable via our bridge br0:

ip neigh

172.31.1.1 dev eth0 lladdr d2:74:7f:6e:37:e3 REACHABLE

203.0.113.2 dev br0 lladdr de:d4:27:20:44:78 REACHABLE

ARP table confusion? The core issue behind Destination Host Unreachable was that our gateway's ARP requests were going unanswered. In a rented 'cloud' environment we can't see the provider's ARP table, but we now know we've managed to get the appropriate entry for our floating IP into it. It's very important that we only do that for our own IPs.

Huzzah! Not so fast. We've resolved the Destination Host Unreachable issue, but now when we ping our floating IP from an external connection, we get... silence

ping 203.0.113.2

# ... nothing

Thankfully we can get some visibility into what's happening by using tcpdump on our host. Are ICMP pings reaching our host? And if so, why are they not being answered?

tcpdump -n -i eth0 -e icmp

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

11:59:32.010084 d2:74:7f:6e:37:e3 > 96:00:01:e1:5c:65, ethertype IPv4 (0x0800), length 98: 37.157.51.68 > 203.0.113.2: ICMP echo request, id 123, seq 12071, length 64

11:59:33.034072 d2:74:7f:6e:37:e3 > 96:00:01:e1:5c:65, ethertype IPv4 (0x0800), length 98: 37.157.51.68 > 203.0.113.2: ICMP echo request, id 123, seq 12072, length 64

11:59:34.057892 d2:74:7f:6e:37:e3 > 96:00:01:e1:5c:65, ethertype IPv4 (0x0800), length 98: 37.157.51.68 > 203.0.113.2: ICMP echo request, id 123, seq 12073, length 64

^C

3 packets captured

3 packets received by filter

0 packets dropped by kernel

Above is a tcpdump of me pinging the floating IP from my internet connection. We can see that the pings are reaching the host (but perhaps not the guest?) and are not being replied to. 🥹

...we need to configure the guest's default gateway correctly, let's go! 🛠️

Configure the guest default gateway so that the guest can speak to the world

tldr: If you just want the steps to configure the guest's default gateway, skip to "Configure the guest default gateway with the correct via route via hosts IP".

Explanation of why the guest default gateway is needed

At the moment, ICMP packets are reaching our host and the guest's virtual Ethernet bridge link, which systemd-nspawn kindly created for us****. However, neither our host nor our guest is replying to those ICMP packets.

As a reminder, whilst pinging our floating IP from an external IP (e.g. your personal internet connection), we can use tcpdump to confirm that our host is receiving the ICMP requests but not replying to them:

tcpdump -n -i eth0 -e icmp

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

11:59:32.010084 d2:74:7f:6e:37:e3 > 96:00:01:e1:5c:65, ethertype IPv4 (0x0800), length 98: 37.157.51.68 > 203.0.113.2: ICMP echo request, id 123, seq 12071, length 64

So right now our guest can only ping its own IP (203.0.113.2) and the host's IP on the directly connected eth0 interface (203.0.113.1). We can't ping out from our guest to the wider internet (e.g. Google's 8.8.8.8). When we ping our guest from our personal internet connection, our guest can't reply either. This strongly suggests a gateway issue:

# Currently our guest routing table is empty:

ip route show

# .. nothing

# (or contains invalid / bad defaults - delete them)

We can confirm that our guest has no route to 8.8.8.8 with:

# Make sure you're in your guest

machinectl login debian

ip route get 8.8.8.8

RTNETLINK answers: Network is unreachable

What about SNAT/DNAT etc.? Not here! Remember, our goal is to have each container appear as though it is publicly routable (which it will be).

What we need to do is configure the guest's default gateway. Then the container's routing table knows where to send packets that aren't on its own network and, critically, which source IP to give those packets. Packets from our guests must carry our floating IP as their source, not the host's IP. In other words, we don't want to masquerade our guest's IP address at all.

Configure the guest default gateway with the correct via route via hosts IP

# Logged in to your guest container:

machinectl login debian

# Delete any existing default route

ip route del default

# Add a static route to your host's IP

# This is the IP address assigned to your hosts eth0 interface

ip route add 203.0.113.1/32 dev host0

# Critically, now you can add a default route, which is mindful

# of your guest having to go via your host's IP for its default route

ip route add default via 203.0.113.1

# Validate you now have a route to 8.8.8.8

ip route get 8.8.8.8

8.8.8.8 via 203.0.113.1 dev host0 src 203.0.113.2 uid 0

cache

The order of adding a static route to your host's IP before the default route is critical here. If you try ip route add default via 203.0.113.1 first, you'll get "Error: Nexthop has invalid gateway.". Quite rightly, the kernel complains that there's no route to 203.0.113.1 yet.
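Because the order matters, it can help to generate the guest's commands from the two addresses rather than typing them by hand. The hypothetical helper below (guest_network_cmds is a made-up name) prints the commands in the required order for a given host IP and floating IP: address first, then the /32 host route, then the default route via the host. It only prints; it doesn't run anything:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the guest-side commands, in order, for a
# container whose floating IP is $2 and whose host (eth0) IP is $1.
#   $3 = guest interface, defaults to host0
guest_network_cmds() {
  local host_ip="$1" guest_ip="$2" dev="${3:-host0}"
  echo "ip addr add ${guest_ip}/32 dev ${dev}"
  echo "ip link set ${dev} up"
  # The host must be reachable on-link before it can be used as a gateway,
  # otherwise the kernel rejects the default route ("Nexthop has invalid gateway")
  echo "ip route add ${host_ip}/32 dev ${dev}"
  echo "ip route add default via ${host_ip}"
}

# Print the plan, review it, then run the printed lines inside the guest:
# guest_network_cmds 203.0.113.1 203.0.113.2
```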

You'll now be able to ping from your container to the wider internet and, vice versa, ping your container's public IP address 🚀! What just happened?*****

On your guest you:

- Added a static route to your VPS's IP address 203.0.113.1, which is reachable from your guest over its host0 interface (ip route add 203.0.113.1/32 dev host0)

- Added a default gateway to your guest. Packets for the default route must not only go out over host0, they must also go via your host's IP address, 203.0.113.1.

But wait! When your packets do this, they still carry your container's IP as their source:

ip route get 8.8.8.8

8.8.8.8 via 203.0.113.1 dev host0 src 203.0.113.2 uid 0

cache

Let's ping to confirm we can:

- Ping from inside our container to an external network

- Ping from the internet to our container

Confirm we can ping from inside our container to an external network (1)

root@debian:~# ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

64 bytes from 8.8.8.8: icmp_seq=1 ttl=57 time=9.08 ms

64 bytes from 8.8.8.8: icmp_seq=2 ttl=57 time=8.57 ms

64 bytes from 8.8.8.8: icmp_seq=3 ttl=57 time=8.49 ms

^C

--- 8.8.8.8 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2004ms

rtt min/avg/max/mdev = 8.492/8.713/9.082/0.262 ms

Confirm we can ping from the internet to our container (2)

$ ping 203.0.113.2

PING 203.0.113.2 (203.0.113.2) 56(84) bytes of data.

64 bytes from 203.0.113.2: icmp_seq=1 ttl=50 time=47.2 ms

64 bytes from 203.0.113.2: icmp_seq=2 ttl=50 time=49.1 ms

64 bytes from 203.0.113.2: icmp_seq=3 ttl=50 time=47.5 ms

^C

--- 203.0.113.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 47.181/47.942/49.111/0.838 ms

*****Jamie Prince sums up this gateway approach beautifully; we've simply extended the concept to the context of nspawn containers:

"Direct connection" can be part of a Layer 2 bridge, as I found, very useful for assigning spare public IPs to internal devices without having to resort to NAT nastiness and complexity.."

- Jamie Prince

Make network configuration static

At the moment we're configuring the guest's IP addresses manually with the ip command, but we don't need to. We can enable and start the systemd-networkd service inside the guest to configure the guest's IP address for us automatically.

machinectl login debian

vim /etc/systemd/network/80-container-host0.network

With the following:

[Match]

Virtualization=container

Name=host0

[Network]

# Your floating IP

Address=203.0.113.2/32

DHCP=no

LinkLocalAddressing=yes

LLDP=yes

EmitLLDP=customer-bridge

[Route]

# Your servers main IP

Gateway=203.0.113.1

GatewayOnLink=yes

[DHCP]

UseTimezone=yes

Now, when you reboot your server, the container is started automatically (since we enabled systemd-nspawn@debian.service earlier).

To apply addressing and gateway config without needing to use the ip command, reload the systemd daemon and restart systemd-networkd******

# Make sure you're still in the guest

machinectl login debian

# Apply the guest addressing:

systemctl daemon-reload

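# Start systemd-networkd on every boot of the guest, and apply the config now

systemctl enable systemd-networkd

systemctl restart systemd-networkd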

# Verify your gateway & route has been configured for you:

# Verify IP address

ip -c a

# Verify gateway

ip route show

# Verify can ping externally

ping 8.8.8.8

******I've most certainly got the systemd drop-in configuration process wrong here. The goal is for systemd to pick up these settings automatically when the container starts, without having to reload/restart systemd-networkd. See systemd.network.html config.
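With more than one container, the only values that change between the guests' 80-container-host0.network files are the floating IP and, if you run several hosts, the host IP. Below is a hypothetical generator (write_guest_network is a made-up name). It mirrors the file above minus the LLDP and DHCP lines, and gives Gateway= as a plain address, as systemd.network expects:

```shell
#!/usr/bin/env bash
# Hypothetical generator for a guest's host0 .network file.
#   $1 = target directory (the guest's /etc/systemd/network)
#   $2 = the guest's floating IP
#   $3 = the host's eth0 IP, used as an on-link gateway
write_guest_network() {
  local dir="$1" guest_ip="$2" host_ip="$3"
  mkdir -p "$dir"
  cat > "${dir}/80-container-host0.network" <<EOF
[Match]
Virtualization=container
Name=host0

[Network]
Address=${guest_ip}/32
DHCP=no

[Route]
Gateway=${host_ip}
GatewayOnLink=yes
EOF
}

# Inside the guest (machinectl login debian), after pasting the function:
# write_guest_network /etc/systemd/network 203.0.113.2 203.0.113.1
# systemctl enable systemd-networkd && systemctl restart systemd-networkd
```

Running it inside the guest rather than writing into /var/lib/machines from the host avoids creating files owned by an unmapped host UID in a user-namespaced container.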

We did it! What did we do exactly?

We've basically configured a lightweight container to have its own publicly routable IP address. Big deal. But wow!

Let's revisit the goals:

- Maximising frugality

  - ✅ CHECK: this entire project's raw costs were less than $5 (and I gained a lot of valuable new insight into systemd-nspawn, networking and the like)

- Not sacrificing reachability: every service must be directly contactable via its public IP address (yes really, no SNAT/DNAT masquerading here)

  - ✅ CHECK: we can reach the containers publicly and there's no SNAT/DNAT going on, which nicely prepares us for IPv6 too

- Reproducibility: In the 'DevOps' world we're very familiar with infrastructure as code (buzzword for: I can audit, change, and rebuild my application workloads easily if I have to). The same is useful for, say, testing network topology generation

  - Could do better: next up is getting another person to follow this, then codifying it (coming soon!)

Thoughts:

- We didn't have to pull in and install any (I'm struggling for words here) 'external' packages

- This is completely open source and leans purely on stable Linux kernel networking with minimal dependencies

Questions:

- How useful is this for you?

- How dependable is systemd-nspawn?

- How maintained is systemd-nspawn?

- How repeatable is this?

- We're using proxy_arp. This likely means other containers will see, and may more easily answer, other nodes' ARP requests. That's a problem because it breaks down some isolation between the containers. To be tested to confirm.

What's next?

Doing the same, with IPv6!

Adding IPv6 support

At the beginning of this article we claimed that "IPv6 is the far more natural fit because when you buy/rent a server you typically get an IPv6 /60 allocated to you for free (cost savings) which gives you 295,147,905,179,352,825,856 globally routable addresses."

So let's see if that's true! Now we're going to do the same thing:

- Get a publicly (globally) routable IPv6 address to your nspawn container(s)

What are the benefits of this?

- Cost: we can trivially use our assigned /60 IPv6 block either to carve out additional subnets (e.g. a subnet per customer) or, keeping it simple, to use the whole remaining /60 range to give each nspawn container 1 publicly routable IPv6 address (we'll start with that).

To achieve the above, many of the same concepts we used in IPv4 apply to IPv6, but with key differences:

- There's no Address Resolution Protocol (ARP) in IPv6; instead there's Neighbor Discovery for IP version 6 (IPv6) (see RFC 4861). tldr: this is the new way that nodes find the hardware address (MAC address) of other nodes.

- Therefore there's no proxy_arp; instead we need to proxy the ICMPv6 neighbour solicitations, otherwise our containers won't be able to 'find' the link layer address of our IPv6 gateway.
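This is an untested sketch of the host-side NDP equivalent of proxy_arp. It only applies if your provider's router expects to resolve each container address via neighbour discovery on eth0. Check this first; a prefix that is routed to your server doesn't need it. In that case the kernel needs proxy_ndp switched on, plus one proxy entry per container address. The helper below only prints the commands. ndp_proxy_cmds is a hypothetical name, 2001:db8:0:10 stands in for your own /64 prefix, and container numbers are assumed to be used as the last address group:

```shell
#!/usr/bin/env bash
# Hypothetical helper (untested sketch): print host-side commands that make
# the uplink answer ICMPv6 neighbour solicitations for container addresses.
#   $1 = uplink interface, $2 = /64 prefix without the trailing "::"
#   remaining args = container numbers, used as the last address group
ndp_proxy_cmds() {
  local iface="$1" prefix="$2"
  shift 2
  echo "sysctl -w net.ipv6.conf.${iface}.proxy_ndp=1"
  # Note: with forwarding on, the host stops accepting router advertisements
  # on an interface unless its accept_ra is set to 2
  echo "sysctl -w net.ipv6.conf.all.forwarding=1"
  local n
  for n in "$@"; do
    # printf %x renders the container number as a hexadecimal IPv6 group
    echo "ip -6 neigh add proxy ${prefix}::$(printf '%x' "$n") dev ${iface}"
  done
}

# Example: ndp_proxy_cmds eth0 2001:db8:0:10 1 2 10
```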

IPv6 by comparison to IPv4 is very chatty and really wants to auto configure, auto announce and even auto migrate connections between networks. It's very cool if you're a networking nerd, and you'll only keep hearing more about it as time goes on- finally...

See IPv6 Only Web Services Becoming Commonplace

1 - Create a new server with an IPv6 block assigned

Create a new server (which gives you a /60 IPv6 address).

git clone https://github.com/KarmaComputing/nspawn-systemd-nspawn-containers.git

cd nspawn-systemd-nspawn-containers

A thing of beauty? Layers. Lots of them.

**** As you recall, the virtual Ethernet link vb-debian is created automatically for us at the host side by systemd-nspawn due to the Bridge=br0 setting we have in our /etc/systemd/nspawn/debian.nspawn config. systemd-nspawn also created the host0 interface for us on the guest side, connecting the two.

Helpful tools to debug routes

Get the default gateway using the ip command:

ip route show default

Show me all the routes in the routing table:

ip route show all

Show me which route a packet would take using ip

This command gets a single route to a destination and prints its contents exactly as the kernel sees it.

- man ip route

For example, here ip route get shows the route to the host IP address from the guest's perspective:

# (Logged into the guest container)

ip route get 203.0.113.1

root@debian:~# ip route get 203.0.113.1

203.0.113.1 dev host0 src 203.0.113.2 uid 0

cache

The route to the host is on-link via host0, with no "via" hop. That's why, before the default gateway was configured, we could ping from the container to the host but not to 8.8.8.8 or anywhere else external.

Notes on tcpdump: how to filter by IP address (src / dst)
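When scripting checks like the ones above, the fields worth pulling out of ip route get are the next hop (via) and the source address (src). The src field is what shows that the guest is using its own floating IP rather than being masqueraded. The sketch below extracts both from the first line of output. route_via_src is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: print "via=<next hop> src=<source>" from the first line of
# `ip route get` output; via is empty for on-link destinations.
route_via_src() {
  awk '
    NR == 1 {
      via = ""; src = ""
      for (i = 1; i < NF; i++) {
        if ($i == "via") via = $(i + 1)
        if ($i == "src") src = $(i + 1)
      }
      printf "via=%s src=%s\n", via, src
    }'
}

# Inside the guest, with the example addresses used in this article:
# ip route get 8.8.8.8 | route_via_src       # via=203.0.113.1 src=203.0.113.2
# ip route get 203.0.113.1 | route_via_src   # via= src=203.0.113.2
```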

How to flush arp table using the ip command

(How to clear the ARP table using the ip command)

ip -s -s neigh flush all

How to filter out ip addresses from tcpdump

For example, put tcpdump in very verbose mode (-vv) without resolving names (-n), but filter out packets destined for 10.0.0.1 and packets sent from 10.0.0.2.

tcpdump -vv -n 'not (dst net 10.0.0.1/32) and not (src net 10.0.0.2/32)'

Further reading

- "nspawn isolation pottering" Poettering: Fitting Everything Together

- LWN.net discussion: https://lwn.net/Articles/894396/

Links & References

The general format of these links is "what I searched" -> link to the resource I read to help deepen my understanding. These are the links visited during research & debugging.

Systemd systemd-nspawn Links & References

- systemd-nspawn — Spawn a command or OS in a light-weight container (freedesktop.org)

- systemd .nspawn files: systemd.nspawn — Container settings (freedesktop)

- "nspawn SetupAPTPartialDirectory (1: Operation not permitted)" [systemd-devel] Permission/updating problems; different behaviour of two identical nspawn containers

- The SetupAPTPartialDirectory error was ultimately caused by me using chroot /var/lib/machines/<machine-name> apt install tcpdump whilst debugging, which evidently messes up the apt cache (and probably other permissions).

Proxy ARP

Note that IPv6 has no arp; its job is done by the Neighbour Discovery Protocol (NDP), which is part of ICMPv6. The IPv4 parts of this guide needed proxy_arp configured, and similarly for IPv6 we need to proxy NDP correctly.
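To check which of the two proxy mechanisms is currently switched on for a given interface, the sketch below reads the kernel's sysctl files directly (the same values that sysctl net.ipv4.conf.<iface>.proxy_arp and sysctl net.ipv6.conf.<iface>.proxy_ndp report). The function name proxy_flags and the PROC_SYS override are invented for this example.

```shell
#!/usr/bin/env bash
# Sketch: report the proxy_arp (IPv4) and proxy_ndp (IPv6) flags for an interface.
# PROC_SYS is a hypothetical override for testing; it defaults to the real /proc/sys.
proxy_flags() {
  local iface="$1" root="${PROC_SYS:-/proc/sys}"
  local arp ndp
  # Each flag lives in a one-line file; "n/a" means the file does not exist
  # (unknown interface, or IPv6 disabled).
  arp="$(cat "${root}/net/ipv4/conf/${iface}/proxy_arp" 2>/dev/null || echo n/a)"
  ndp="$(cat "${root}/net/ipv6/conf/${iface}/proxy_ndp" 2>/dev/null || echo n/a)"
  printf '%s proxy_arp=%s proxy_ndp=%s\n' "$iface" "$arp" "$ndp"
}
```

On the host you might run proxy_flags br0 and proxy_flags all; the kernel also takes the all value into account, so a 0 on the bridge alone doesn't necessarily mean proxying is off.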

- https://web.archive.org/web/20151018073447/http://mailman.ds9a.nl/pipermail/lartc/2003q2/008315.html

- https://ixnfo.com/en/how-to-enable-or-disable-proxy-arp-on-linux.html

- "Proxy ARP cannot ping" No ping Proxy-ARP from LAN/DMZ (Juniper.net)

- "bridge not replying to pings" Linux pings from VM on virtual bridge get sent, but not returned (Stackoverflow.com)

- Linux Bridge (brctl) not passing the traffic (Stackexchange.com)

- "ebtables" ebtables/iptables interaction on a Linux-based bridge (ebtables.netfilter.org)

- "proxy arp" -> "proxy_arp sysctl" Proxy ARP (linux-up.net) - a good explanation of what Proxy ARP is

- "arp reply packet format" Address Resolution Protocol (ARP) New Paltz edu

- "python send arp reply" (with this search I was scraping the barrel of my understanding, since I hadn't yet understood proxy_arp) ARP responder using Python / scapy

- "linux send arp broadcast" How to broadcast ARP update to all neighbors in Linux?

- Not directly related to containers, nor our approach (we're not using MASQUERADE), but this post highlights that approach. We don't want masquerading because it would undermine our goal of non-NATed, globally routable containers. Giving each container its own public IP also makes things like socket activation easier, because port numbers are now namespaced to each IP. Kubernetes services nicely abstract this away for you too (originally hidden behind complex iptables rules), so that many different deployments can look as though they're all bound to port 80, for example; the same is true here.

- "cannot ping nspawn container bridge" & "cannot ping nspawn container"

- "man arp iface" Linux networking: arp versus ip neighbour

- "send arp reply with linux"

Proxying IPv6 Neighbor Discovery

A thank you: Kevin P. Fleming's research and open source contributions were instrumental for understanding and debugging the nuances between networkd, nspawn and the proxying required for IPv6 neighbor discovery to work within the context of virtual Ethernet links attached to a bridge.

Specifically, he highlighted that ip neigh show proxy may be used to verify whether an IPv6 NDP proxy is configured on your bridge interface, and he raised a PR, "network: Delay addition of IPv6 Proxy NDP addresses", which appears to be included in Debian 11 now.

- "proxy_arp ipv6" How to convert proxy ARP setup to IPv6? Note that the recommendation to use "ndppd" is a red herring for our use case, since we can achieve our goals without needing these daemons (see "NDP Proxy Daemon" in the links below).

- "nspawn guest neighbour solicitation" Why NDP doesn't work on virtual ethernet interface for packets arriving from outside? (serverfault.com)

- "neighbor solicitation ipv6 destination" How to: IPv6 Neighbor Discovery (blog.apnic.net)

- "IPv6ProxyNDP" "nspawn" IPv6 Proxy NDP addresses are being lost from interfaces after networkd adds them (Github.com - Fleming)

- "nspawn ipv6" (Kevin P. Fleming)

- "how to answer an arp request" Traditional ARP

- "ip neigh show proxy" ip neighbor (linux-ip.net)

- "ipv6 multicast address" IPv6 Multicast Address Space Registry

- [systemd-networkd] IPv6 Prefix Delegation not parsing IA_PD responses from upstream RAs

- NDP proxy (Openstack)

- "NDP" "nspawn" [systemd-devel] systemd-nspawn and IPv6

- "NDP Proxy Daemon" and "nspawn" "ipv6"

- NS routing ndppd (Github) Ultimately not needed

- ndprbrd NDP Routing Bridge Daemon

- ndppd - NDP Proxy Daemon (ubuntu manuals)

- openwrt/odhcpd & [systemd-devel] systemd-nspawn and IPv6

- These daemons are ultimately not needed, since systemd (and kernel networking via ip) can be configured to add a proxy neighbor (e.g. ip neigh add proxy <IPv6 address> dev br0), but they give good context for the IPv6 networking required.

- "man ip neigh" ip-neighbour(8) — Linux manual page

- "linux ipv6 static neighbor" and "proxy neighbor solicitation" Manipulating neighbors table using "ip"

tcpdump Related Links and References

- "man tcpdump -vvnr" https://www.tcpdump.org/manpages/tcpdump.1.html

- "tcpdump filter out ip" tcpdump filter that excludes private ip traffic

- "tcpdump ipv6" IPv6-ready test/debug programs (tldp.org)

- "tcpdump NDP" Capture of IPv6 advertisement message via tcpdump
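Pulling the tcpdump searches above together, here is a small sketch of two filters that can help when debugging proxy NDP: one that hides all traffic to or from a list of noisy hosts, and one that matches only Neighbour Solicitations (ICMPv6 type 135) and Neighbour Advertisements (type 136). The helper name and the NDP_FILTER variable are made up for illustration. The exclusion filter is deliberately broader than the earlier example, which only dropped packets to 10.0.0.1 and from 10.0.0.2. The ip6[40] offset assumes the ICMPv6 header directly follows the fixed 40-byte IPv6 header, which is normally the case for NDP packets.

```shell
#!/usr/bin/env bash
# Sketch: helpers for building tcpdump (pcap-filter) expressions.

# Build a filter that drops every packet to or from any of the given hosts,
# e.g. exclude_hosts_filter 10.0.0.1 10.0.0.2
exclude_hosts_filter() {
  local expr="" h
  for h in "$@"; do
    expr="${expr:+$expr and }not host $h"
  done
  printf '%s\n' "$expr"
}

# ICMPv6 Neighbour Solicitation (135) and Neighbour Advertisement (136).
# Assumes no IPv6 extension headers, so the ICMPv6 type byte sits at offset 40.
NDP_FILTER='icmp6 and (ip6[40] == 135 or ip6[40] == 136)'
```

Typical usage would be `tcpdump -i br0 -vv -n "$NDP_FILTER"` to watch solicitations and advertisements on the bridge, or `tcpdump -vv -n "$(exclude_hosts_filter 10.0.0.1 10.0.0.2)"` to hide chatter from two hosts.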

ip command Related Links & References

- "ip route command returns file exists" ip route add returns "File exists"

- "ip link get promiscious mode" How to configure interface in “Promiscuous Mode” in CentOS/RHEL

- "ip route add" & "ip route add default" ip route add network command examples (cyberciti)

- "ip add default route" How to view or display Linux routing table on Linux (Cyberciti.biz)

- "ip route show gateway" How do i get the default gateway in LINUX given the destination? (Serverfault.com)

- "static route ipv6" How to configure IPv6 Static Route (networklessons.com)

- "ip neigh add proxy" ip neighbor

- See also warnings about using proxy ARP: Breaking a network in two with proxy ARP, and how to see manually specified proxy ARP entries using ip neigh (which suggested that ip neigh add/delete was being removed; however, we're now in 2023 and that has not happened. Caution.)

- "ip route add ipv6 default" Add an IPv6 route through an interface (tldp.org)

- "ip delete default gateway" proper syntax to delete default route for a particular interface?

- ip route del does not delete entire table

Linux Kernel Networking Links & References

- "ip link scope meaning" What does “scope” do in ip route and why it is necessary to setup static route in Linux? (Superuser.com)

- This post was particularly illuminating because at this point I hadn't realised that the way I was calling the ip command wouldn't show me a complete view of the kernel's routing tables.

- "hetzner bridged" && "Hetzner static" && "hetzner dns config debian"

- hetzner default nameservers not always resolving (Serverfault.com)

- Static IP configuration Hetzner (Hetzner)

- Routed (brouter) Hetzner

- "ssh keygen non interactive no password" Automated ssh-keygen without passphrase, how?

- "dig ipv6" (how to dig for only the IPv6 address records, i.e. AAAA records, for a domain) tldr: dig example.com AAAA. Get IPv4 and IPv6 with one command (stack overflow)

- https://www.kernel.org/doc/Documentation/networking/policy-routing.txt

- "hbh icmp6" draft-ietf-6man-hbh-header-handling-03

- "what does two colons mean in ipv6 default gateway" What does [::] mean as an ip address? Bracket colon colon bracket

- "Error: either "to" is duplicate, or" ip route add -- Error: either "to" is duplicate, or "10.0.0.1" is a garbage

- "ip route add file exists" ip route add - RTNETLINK answers: File exists

Networkd systemd-networkd Links & References

- "poettering systemd new" [NYLUG Presents: Lennart Poettering -on- Systemd in 2018](https://www.youtube.com/watch?v=_obJr3a_2G8)

- https://systemd.network/systemd.network.html

- "cannot ping nspawn container bridge" Can't connect container with bridge to the internet using networkd

- "Debian networkd bridge" Bridging Network Connections (Debian.org)

- "debian systemd-networkd" Setting up Systemd-Networkd (Debian.org)

- "systemd networkd file" IPv6ProxyNDP IPv6 Neighbour Discovery Protocol Proxy NDP (freedesktop.org)

- "systemd VirtualEthernetExtra" systemd.nspawn — Container settings

- ""[Bridge]" systemd" Creating a bridge for virtual machines using systemd-networkd

- "RestartSec" (curious about systemd's ability to auto-restart services (aka units) and timers) RestartSec= (freedesktop.org)

- "systemd-networkd wait for bridge" systemd-networkd and Creating a bridge (wiki.archlinux.org)

- "since: 2020 why is systemd-networkd stopping Stopped Network Service" Systemd-networkd has to be manually restarted each boot (askubuntu.com)

- "systemd drop ins" https://flatcar-linux.org/docs/latest/setup/systemd/drop-in-units/

- "Gateway ipv6 neworkd" How to add static ipv6 route with systemd-networkd (stackexchange.com)

- "Networkd" systemd-networkd (archlinux)